How to prevent the product death cycle

AI gave us a new way of screwing up products.

AI itself isn’t the problem!

However, companies are using AI to make the same old product mistakes faster and with more confidence.

Unpopular opinion, I know, but stay with me for a few minutes!

If you spend as much time on LinkedIn as I do, you’ve probably noticed how quickly people in tech re-emerged as AI product managers, AI product designers, and AI engineers (actually, the last one does make sense).

In the past couple of years, I’ve been watching companies make the same old mistakes with shiny new tools.

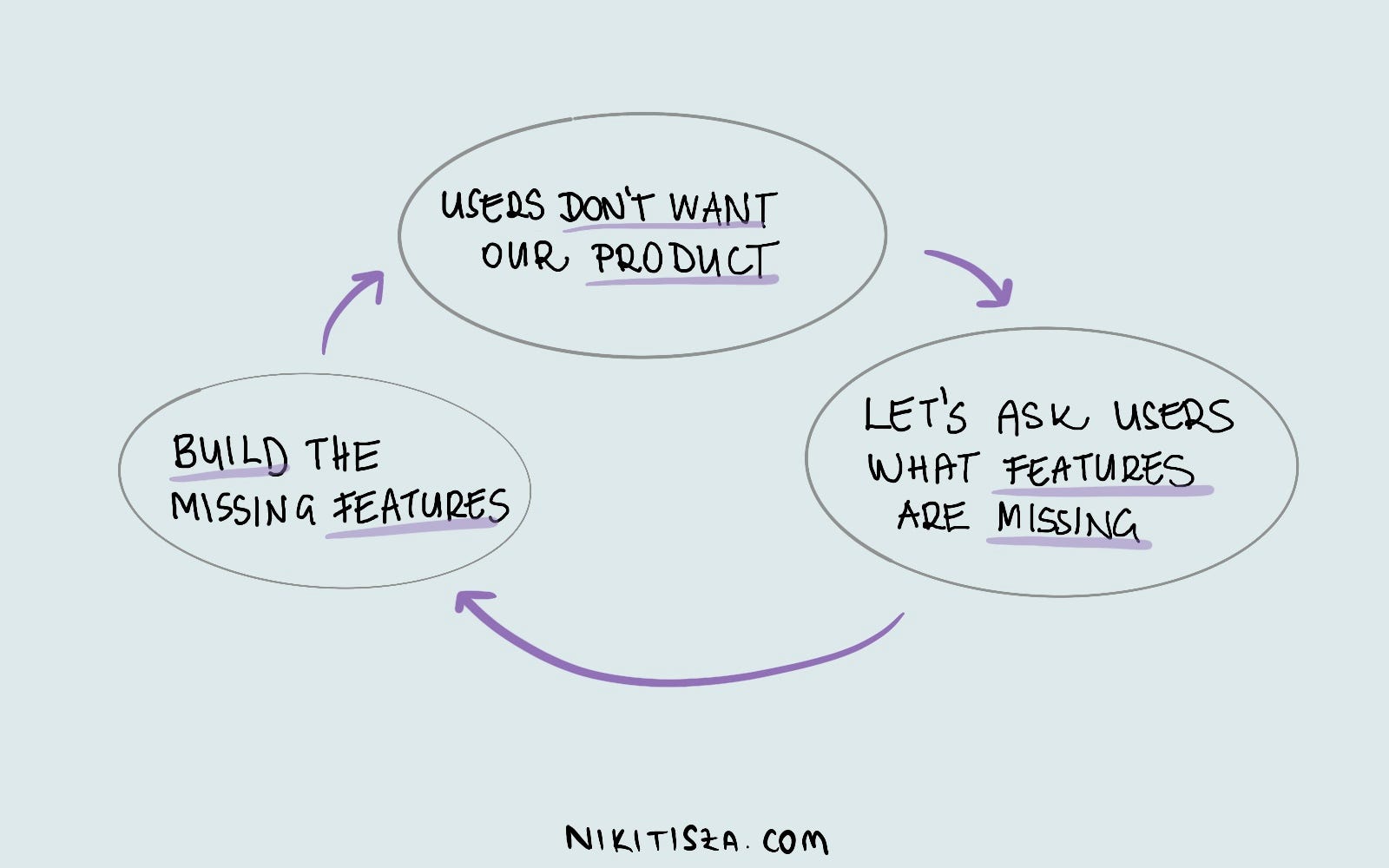

In product development, there’s a well-known Product Death Cycle that perfectly captures how companies waste money:

Now we have an even more dangerous version:

This new cycle feels data-driven and strategic.

It feels smarter. But it’s not!

The old cycle at least involved talking to actual people, even if we asked them the wrong questions. The new one cuts out humans completely. We’re literally asking the computer what the computer should do next.

From a product perspective, AI seems like a dream come true.

It gives us everything we think we want: user personas, product narratives (PRDs), feature lists, prototypes, competitive analysis, market research, go-to-market strategy, pricing, etc. All delivered with complete confidence and perfect formatting in minutes.

It seems product teams aren’t getting insights about real users. Instead, they’re asking AI to write PRDs that sound impressive but lack real data backing them up. And when you ask, ‘Hey, where’s the data supporting this point?’

Well, the answer is crickets.

We’re getting predictions based on internet patterns.

AI is in this weird place right now where leadership thinks it’s magic, but any engineer could tell them it’s just a really good prediction engine.

Companies are under pressure to ship AI features, so they’re using AI to decide what AI features to ship.

It’s a loop that produces technically perfect features and products that solve problems nobody has.

What’s really happening?

Companies are using AI to avoid the hard work of understanding users.

It’s so much easier to ask ChatGPT (or your favourite LLM tool) “What would make our banking platform better?” than to do proper user research.

Why sit through uncomfortable interviews where customers tell you your core assumptions are wrong when you can get a beautiful feature roadmap generated in seconds?

The problem is that our users aren’t patterns in a dataset.

They’re people trying to get specific jobs done, dealing with unique constraints, working in environments that don’t match what the AI thinks they should want.

Here’s what actually works.

Not surveys.

Not focus groups.

Not AI-generated personas.

Actual conversations where you shut up and listen to people describe their real problems.

Ask them to walk you through their current process. Watch them struggle with existing solutions.

Ask them: ‘Compared to all the other things you’re trying to solve right now, where does this problem rank?’

If your amazing AI feature solves their 8th biggest problem, guess what?

Nobody cares how smart your algorithm is.

This ranking exercise cuts through what researchers call the ‘focusing illusion’, where people overstate the importance of whatever you happen to be asking about.

It’s like asking someone if they want better coffee in the office. Of course, they’ll say yes.

But when they rank it against getting a raise, fixing their laptop, or having fewer meetings, suddenly having better coffee drops way down the list.
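If you want to see how this plays out across several interviews, here is a minimal sketch of how the ranking answers could be tallied. The interview data and the `median_rank` helper are made up for illustration; in practice the lists would come from your own interview notes.

```python
from statistics import median

# Hypothetical interview notes: each list is one person's problems,
# ordered from most important (first) to least important (last).
interviews = [
    ["getting a raise", "fewer meetings", "fixing my laptop", "better coffee"],
    ["fewer meetings", "getting a raise", "better coffee", "fixing my laptop"],
    ["fixing my laptop", "getting a raise", "fewer meetings", "better coffee"],
]


def median_rank(interviews, problem):
    """Return the median position (1 = most important) of a problem
    across all interviews that mention it, or None if nobody did."""
    ranks = [ranking.index(problem) + 1
             for ranking in interviews
             if problem in ranking]
    return median(ranks) if ranks else None


# Everyone would say yes to better coffee, but look where it lands.
for problem in ["getting a raise", "fewer meetings",
                "fixing my laptop", "better coffee"]:
    print(problem, "->", median_rank(interviews, problem))
```

A median is used here rather than an average so that one unusual interviewee does not drag the result around. The numbers are only a summary; the conversations themselves still carry the real insight.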

If you have high retention but can’t get new users, you probably don’t have a product problem. You have a marketing or awareness problem.

Stop building features and start telling people why they should care.

If you’re getting tons of new users but they all leave quickly, that’s either a product problem or an engagement problem.

Your onboarding might suck, your core value might be unclear, or you might be attracting the wrong people.
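The two situations above can be written down as a rough decision rule. This is only a sketch: the `diagnose` function and both thresholds are assumptions I picked for illustration, and what counts as healthy retention or acquisition depends entirely on your product and market.

```python
def diagnose(retention_rate, monthly_new_users,
             retention_ok=0.4, acquisition_ok=1000):
    """Rough first-pass diagnosis from two metrics.

    retention_rate: share of users still active after some period (0 to 1).
    monthly_new_users: number of new sign-ups per month.
    Both thresholds are hypothetical defaults, not industry benchmarks.
    """
    retained = retention_rate >= retention_ok
    acquiring = monthly_new_users >= acquisition_ok

    if retained and not acquiring:
        # People who find the product stay, but too few find it.
        return "marketing or awareness problem"
    if acquiring and not retained:
        # Plenty of people arrive, but they leave quickly.
        return "product or engagement problem"
    if retained and acquiring:
        return "no obvious problem on these two metrics"
    return "both acquisition and retention need work"


print(diagnose(retention_rate=0.6, monthly_new_users=50))
print(diagnose(retention_rate=0.1, monthly_new_users=5000))
```

The point of the rule is to decide where to look first, not to replace the conversations that tell you why the numbers look the way they do.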

Should your product serve high-end users who need tons of features, or everyday users who want something simple?

Your product can’t be everything to everyone, and AI doesn’t magically solve that fundamental product strategy question.

From my experience leading people through AI projects, the ones that succeeded (or have the potential to succeed) had a few things in common:

They treat(ed) AI as a tool, not a strategy.

Just like we designers wouldn’t design features just because we have Figma, you shouldn’t build AI features just because you have access to ChatGPT and it told you to.

The most pointless AI products I see all work perfectly.

They have great user interfaces, smooth performance, and amazing technical capabilities.

They also solve problems that exist mainly in conference rooms and pitch decks:

They’re all impressive demonstrations of what’s possible, and they’re all gathering dust because nobody has a job they need these tools to do. Most users don’t want another generic chatbot that doesn’t solve their actual problems.

The fundamental work of understanding users hasn’t gotten easier or less important just because we have better technology.

Your product won’t stand out by shipping AI features fast.

Your product will stand out by demonstrating how well you understand what your users are trying to accomplish and how your solution helps them do it.

We need to focus on value creation rather than churning out new AI-powered features just because that’s what everyone else is doing.

AI can make a lot of things faster and cheaper.

It can help you spot patterns in research data (if you feed it support tickets, logs, and interview transcripts), quickly prototype concepts, and analyze user feedback at scale.

But it can’t replace the messy, uncomfortable, essential work of talking to real people about real problems.